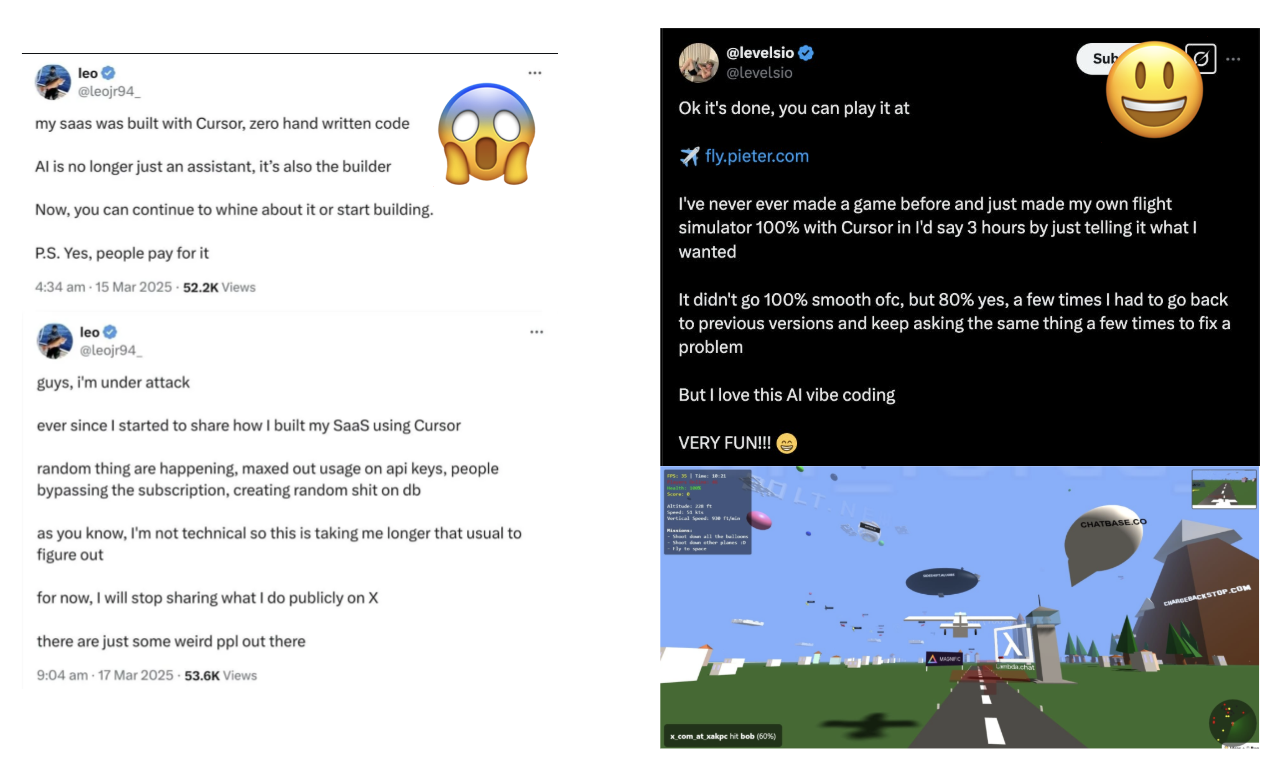

Agentic coding is on the rise, and the productivity gains from it are real. There are plenty of success stories of non-programmers "vibe coding" SaaS apps to $50k MRR. But there are also cautionary tales of those same SaaS apps leaking API keys, racking up unnecessary server costs, and growing into giant balls of spaghetti code that even Cursor can't fix.

It's not that LLMs can't code. In fact, LLMs are very good at many coding tasks. The issue is that LLMs can't code at scale. Go to any "vibe coding" app (Cursor, Bolt, etc.) and ask it to create a simple game. If you've never tried this, it will shock you how easily it goes from zero to a fully working prototype. But then ask it to turn that prototype into a live multiplayer game, add character customization, or make any other change that requires a big refactor, and your game will start to fall apart.

Why Does Vibe Coding Collapse at Scale?

Before diving into the principles, we have to talk about why LLMs can't simply make sweeping changes to massive codebases.

Misinterpreting Your Requirements

If your prompt is vague, LLMs won't ask for clarification. They will take creative liberties with your requirements and compulsively complete any task they are given. This is a feature, not a bug, since most AI users just want fast results. But if you're not explicit, this quirk will introduce unwanted code.

Compounding Error

Even if AI agents were 99% accurate, that 1% error rate compounds the longer you let it go unchecked. Slightly buggy code written on top of slightly buggy code creates exponentially buggier systems. The better path is to continuously course-correct the agent toward a direction you are comfortable with. So yes, you still need to be a good programmer to spot and avoid risky patterns; vibe coding is not a substitute for good instincts.
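To put rough numbers on that compounding effect, here is a minimal sketch. It assumes every agent step is correct independently and with the same probability, which is a simplification (real errors are correlated), and the function name `chance_all_correct` is ours, purely for illustration.

```python
def chance_all_correct(per_step_accuracy: float, steps: int) -> float:
    """Probability that every one of `steps` changes is correct.

    Assumes each step succeeds independently with the same probability.
    That is an illustrative simplification, not a measured model.
    """
    return per_step_accuracy ** steps


if __name__ == "__main__":
    for steps in (10, 100, 500):
        chance = chance_all_correct(0.99, steps)
        print(f"{steps:>3} unchecked steps: {chance:.1%} chance of no errors")
```

Under that assumption, 100 unchecked steps at 99% accuracy leave only about a 37% chance that nothing went wrong, which is the argument for reviewing early and often.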

Current Technical Limitations

Despite rapid improvements, today's models still face fundamental constraints. Context windows limit how much code models can "see" at once, while logical reasoning flaws mean they excel at common patterns but stumble on novel problems. This problem is precisely why we need principles now. As these models improve, their bugs will become more subtle and widespread, making detection harder without proper guardrails in place.

At WHOOP, every member of the engineering team has access to Cursor. In using it, we've seen firsthand where it falls short and where it's consistently good. We've developed a set of principles to get the most from AI-written code while remaining in control of our codebase.

5 Principles of Agentic Coding

We call this GUSTO coding (instead of vibe coding). It's an acronym for the 5 principles.

Git: Commit Often

AI writes code with total confidence, even when it's wrong. It can quietly edit files you never touched, break features that were green moments ago, and steer the task in a direction you didn't ask for.

That's why you need to checkpoint constantly. Commit after every meaningful step, so you can roll back when things go wrong. Tools like Cursor's side-panel make this a one-click habit and let you inspect the diff before you hit Commit.

If the agent's output feels off, stash it and start over. Refine your prompt and have it try again. The AI won't mind that you threw away its work, and you'll move faster in the end.
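If you prefer the command line to an IDE panel, the checkpoint-or-discard habit can be sketched as a thin wrapper around standard Git commands. The helper names (`checkpoint_commands`, `discard_commands`, `run_all`) are hypothetical and not part of any tool mentioned here; the sketch assumes `git` is on your PATH and that you run it from inside a repository.

```python
import subprocess


def checkpoint_commands(message):
    """Git commands that stage everything and commit it as a checkpoint."""
    return [
        ["git", "add", "--all"],
        ["git", "commit", "-m", message],
    ]


def discard_commands(label):
    """Git commands that set the agent's uncommitted work aside in a stash.

    --include-untracked also stashes brand-new files the agent created.
    """
    return [
        ["git", "stash", "push", "--include-untracked", "-m", label],
    ]


def run_all(commands, cwd="."):
    """Run each command in order and stop at the first failure."""
    for argv in commands:
        subprocess.run(argv, cwd=cwd, check=True)
```

For example, `run_all(checkpoint_commands("Add lobby endpoint"))` records a checkpoint, while `run_all(discard_commands("bad refactor attempt"))` shelves the attempt so you can re-prompt from the last commit. Stashing rather than hard-resetting keeps the discarded work recoverable.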

Understand Every Change

LLMs write code very fast, and it can feel overwhelming to review it all. But if you're not keeping track of the incremental changes, over time you will lose control of your codebase. Make sure you have a good grasp of what has changed before you hit the commit button.

Review diffs in tools you're comfortable with (IDE diff viewers, GitHub PR view, or specialized Git tools). If you're not sure about a change, ask the AI why it made that choice. Sometimes you'll learn something new, or it might correct itself. If the explanation doesn't make sense, don't proceed.

Watch for these red flags:

- New dependencies and technologies you didn't ask for.

- Surprise changes to .gitignore or build files.

- Generated binaries sneaking into commits.

- Changes to files outside the scope of your request.
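Some of these red flags can be caught mechanically before you read the diff. The sketch below is one possible approach, not a WHOOP tool: the file-name lists are hypothetical and incomplete, and `red_flags` is a name we made up for illustration. It only looks at paths, so it complements a real review rather than replacing it.

```python
from pathlib import PurePosixPath

# Hypothetical, non-exhaustive lists; adjust them to your own stack.
DEPENDENCY_FILES = {"package.json", "requirements.txt", "pyproject.toml", "go.mod", "Cargo.toml"}
BUILD_FILES = {".gitignore", "Makefile", "Dockerfile", "build.gradle"}
BINARY_SUFFIXES = {".exe", ".dll", ".so", ".jar", ".pyc", ".zip", ".o", ".class"}


def red_flags(changed_paths, allowed_prefixes):
    """Return (path, reason) pairs for changes that deserve a closer look."""
    flags = []
    for path in changed_paths:
        parsed = PurePosixPath(path)
        if parsed.name in DEPENDENCY_FILES:
            flags.append((path, "dependency manifest changed"))
        if parsed.name in BUILD_FILES:
            flags.append((path, "ignore or build file changed"))
        if parsed.suffix.lower() in BINARY_SUFFIXES:
            flags.append((path, "looks like a generated binary"))
        if not any(path.startswith(prefix) for prefix in allowed_prefixes):
            flags.append((path, "outside the requested scope"))
    return flags
```

The list of changed paths could come from `git diff --name-only`, and `allowed_prefixes` is whatever directory you told the agent to stay within, such as `["src/game/"]`.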

Small Tasks

It's very tempting to let a big task rip, but in my experience this almost always backfires. It does vary by task: when you're starting from scratch, the agent tends to get pretty far. But when you're making changes in an existing codebase, it's better to take an incremental approach.

If you have a large change, one tip is to ask the agent to outline a plan in a PLAN.md file with a detailed task list. Refine the plan with the agent, and once you're happy with it, tackle the tasks one at a time.

Be very explicit about constraints: "Only modify [file]," or "Do [task] without modifying [function]." Clear success criteria for each subtask keep both you and the AI on track.
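The plan-then-execute loop can be sketched in a few lines. This assumes PLAN.md uses Markdown task-list items (`- [ ]` for open, `- [x]` for done), which is our assumption rather than a required format, and the helpers `next_open_task` and `build_prompt` are hypothetical names for illustration.

```python
import re

# Assumes PLAN.md uses Markdown task-list items: "- [ ] todo" and "- [x] done".
TASK_PATTERN = re.compile(r"^\s*[-*] \[( |x|X)\] (.+)$")


def next_open_task(plan_text):
    """Return the first unchecked task in the plan, or None if all are done."""
    for line in plan_text.splitlines():
        match = TASK_PATTERN.match(line)
        if match and match.group(1) == " ":
            return match.group(2).strip()
    return None


def build_prompt(task, only_modify, success_criteria):
    """Render one subtask as a prompt with explicit constraints."""
    return (
        f"Task: {task}\n"
        f"Only modify: {', '.join(only_modify)}\n"
        f"Done when: {success_criteria}\n"
        "Do not start any other task from PLAN.md."
    )
```

Feeding the agent one task at a time, each with its own file scope and success criteria, keeps every diff small enough to review before you commit it.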

Test Everything

Think of tests as automatic feedback for the agent. The AI can read the code and guess whether it works, but without running it, the AI can't confirm that it does. Write lots of unit and integration tests. With AI, this is now far less laborious.

Having a well-tested codebase helps with the rest of the principles. It's easier to catch mistakes when tests fail, and reading tests is often faster than understanding the implementation. The real speed boost emerges once your codebase is well tested and your agent can self-correct.

Tip: Linters and type-checkers also count as mini-tests, and most agentic coding tools like Cursor already auto-fix these issues as they write code.
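One way to picture tests, linters, and type-checkers as a single feedback signal is a small runner that collects the output of whichever checks fail. This is a sketch under our own assumptions: `run_checks` is a hypothetical helper, and the commands you pass in depend entirely on your project.

```python
import subprocess


def run_checks(checks):
    """Run each named command and return {name: output} for the ones that fail.

    `checks` maps a label to an argv list. A non-zero exit code counts as a
    failure, and the captured output is what you would hand back to the agent.
    """
    failures = {}
    for name, argv in checks.items():
        result = subprocess.run(argv, capture_output=True, text=True)
        if result.returncode != 0:
            failures[name] = (result.stdout + result.stderr).strip()
    return failures
```

In a Python project that happens to use pytest and mypy, for instance, you might call `run_checks({"tests": ["python", "-m", "pytest", "-q"], "types": ["python", "-m", "mypy", "."]})` and paste any failures back into the agent's next prompt. An empty result means every check passed.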

Organize Your Codebase

Coding agents don't care about the overall cleanliness of the codebase; they are focused only on completing the task at hand. As you accumulate AI-written code, seemingly small changes can turn into large refactors. As with any large codebase, it's a good idea to keep things organized and modular to avoid these big refactors.

While you wait for the agent to finish a task, use the downtime to think ahead about how you want to organize your project. Leverage Cursor rules or AGENTS.md to guide the agent toward your desired approach, and spend a few cycles working with it to write good documentation directly in your repo. This pays dividends over time, since you won't need to constantly repeat yourself in prompts and you'll have to correct the agent less frequently.

Wrapping Up

GUSTO coding is a recipe for confidence-at-speed. By following these five principles, you can harness the power of AI while maintaining control and delivering high-quality software.

- Git commit to create checkpoints you can roll back to.

- Understand the changes made to stay in sync with the agent.

- Small tasks will make this feedback loop easier on you.

- Test everything to help it self-correct.

- Organize your codebase constantly to maintain control.

At WHOOP, we're not just adopting AI; we're pioneering its use to build cutting-edge features that enhance the lives of our members. We're navigating this exciting new AI world, learning and adapting just like you. If you're passionate about building the future of human performance and want to be part of a team that's pushing the boundaries of technology, we invite you to join us. Let's learn and grow together.