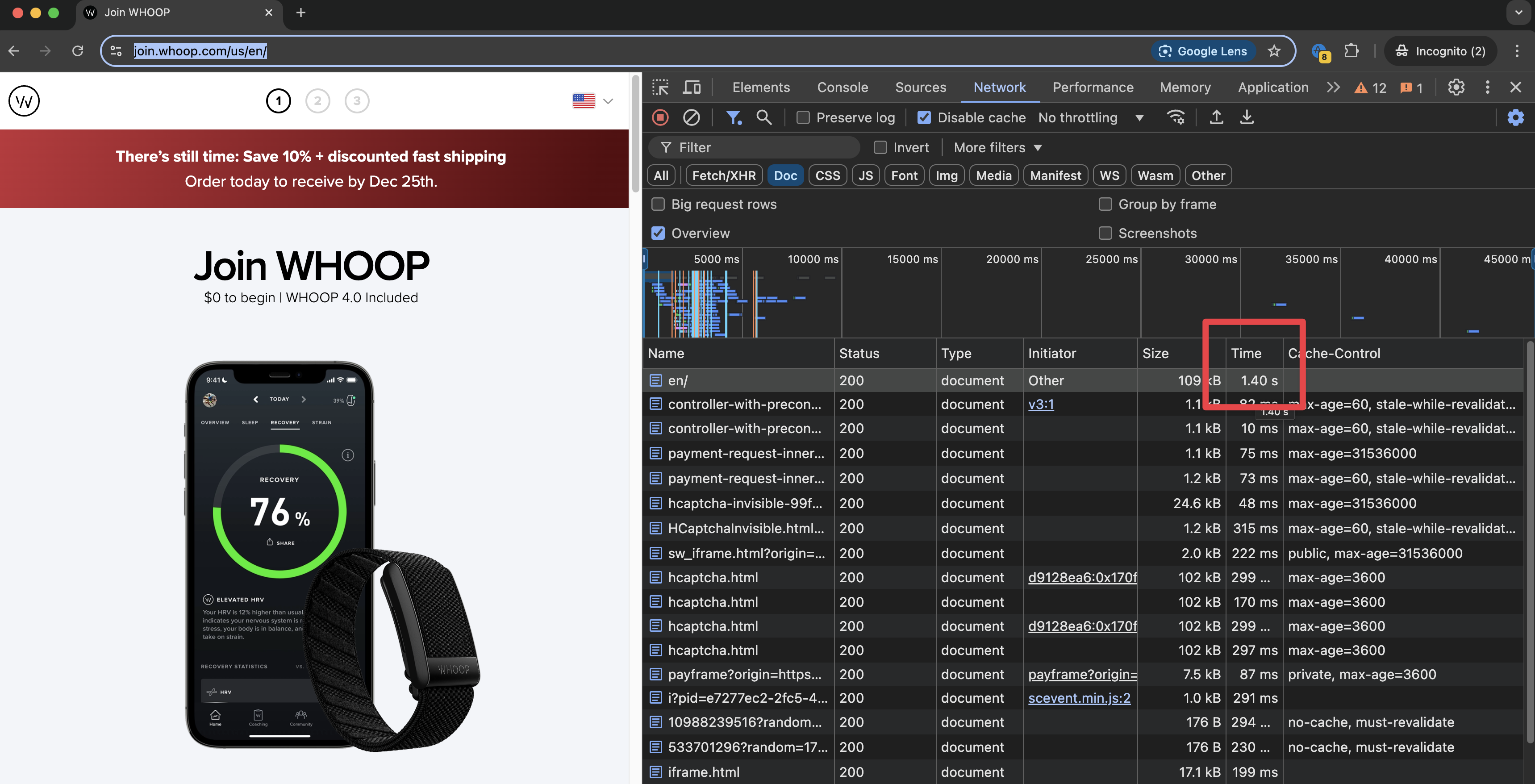

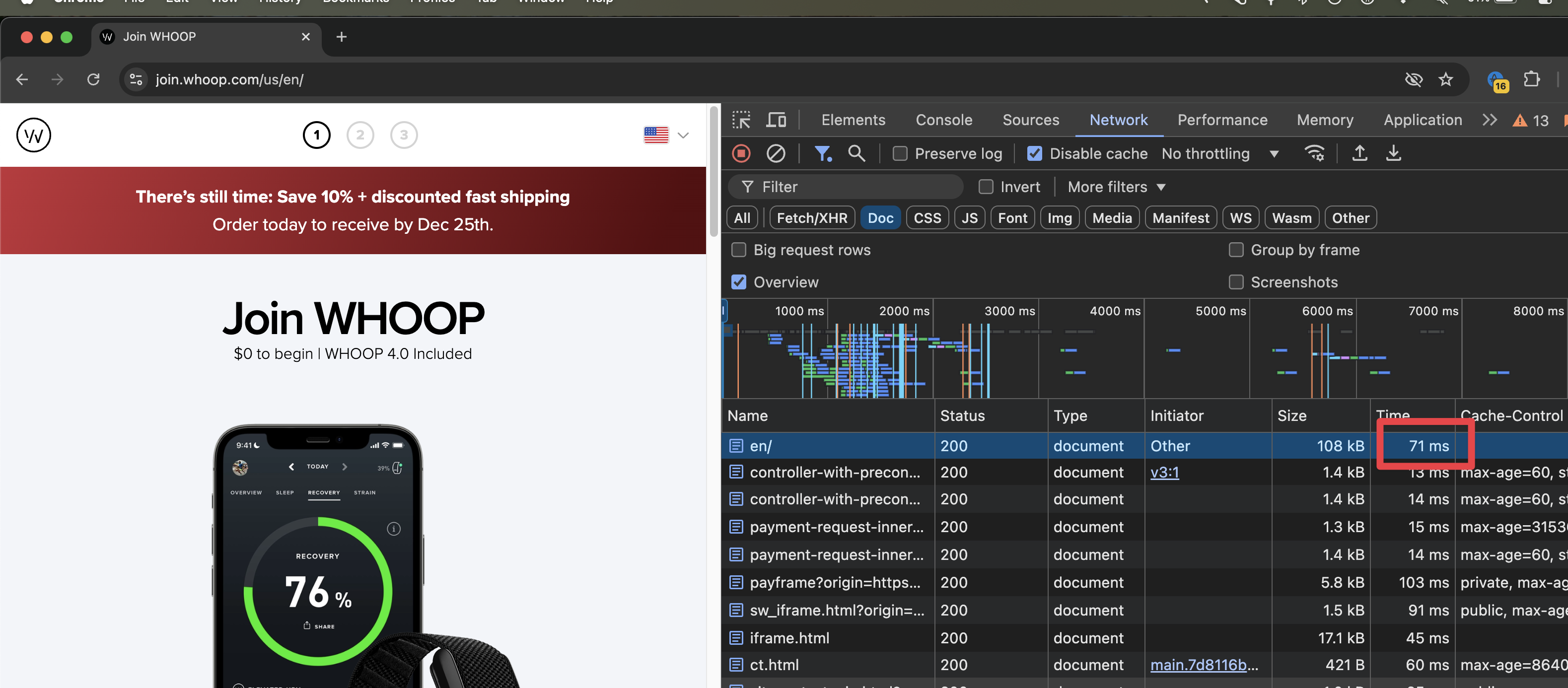

We are excited to share a small trick that can dramatically speed up your web apps. Last holiday season, we implemented some caching and the results surprised us. We expected some improvement, but the load time for our checkout app improved beyond our expectations. It went from 1400ms to 71ms. That’s roughly 20 times faster, more than an order of magnitude!

Before

After

Cache your HTML with short Time To Live (TTL)

Before we go any further, we’ll divulge the trick. The rest of the article will break it down and go into more detail so you understand the context in which we are using this and how you can implement it as well.

Without further ado, the key to this reduction in load time is HTML caching with a short Time To Live (TTL). By caching the HTML but letting the cache expire within a reasonably short time, we were able to extract large performance gains without worrying much about staleness (thanks to the short TTL).

Let’s define Load time

Load time, as we are referring to in this article, is defined as the time it takes from request initiation to receiving the complete HTML response.

Time to First Byte (TTFB) is a helpful reference point, but it is not quite the same thing. TTFB measures the time it takes to receive just the first byte, whereas we are talking about the time it takes for the entire HTML response to load. We would also not call it the page load time because that involves downloading all relevant assets. The most accurate way to think of it is how long it takes the browser to receive the HTML response for a URL.
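To make the distinction concrete, here is a minimal sketch of how the two numbers could be derived from browser timing data. The field names mirror the browser's `PerformanceNavigationTiming` entry; the function names and the sample values are our own, made up for illustration. Note that some tools measure TTFB from the start of navigation rather than from the start of the request, so treat this as one reasonable definition rather than the only one.

```typescript
// Subset of the fields exposed by the browser's PerformanceNavigationTiming entry.
interface NavigationTimings {
  requestStart: number;  // ms: the browser starts requesting the document
  responseStart: number; // ms: the first byte of the response arrives
  responseEnd: number;   // ms: the last byte of the response arrives
}

// Time to First Byte: from request start until the first byte arrives.
function timeToFirstByte(t: NavigationTimings): number {
  return t.responseStart - t.requestStart;
}

// "Load time" as defined in this article: from request start until the
// complete HTML response has been received.
function htmlLoadTime(t: NavigationTimings): number {
  return t.responseEnd - t.requestStart;
}

// Made-up sample values, for illustration only.
const sample: NavigationTimings = { requestStart: 10, responseStart: 60, responseEnd: 81 };
console.log(timeToFirstByte(sample), htmlLoadTime(sample)); // prints "50 71"
```

In a browser, the real values would typically come from `performance.getEntriesByType("navigation")[0]`.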

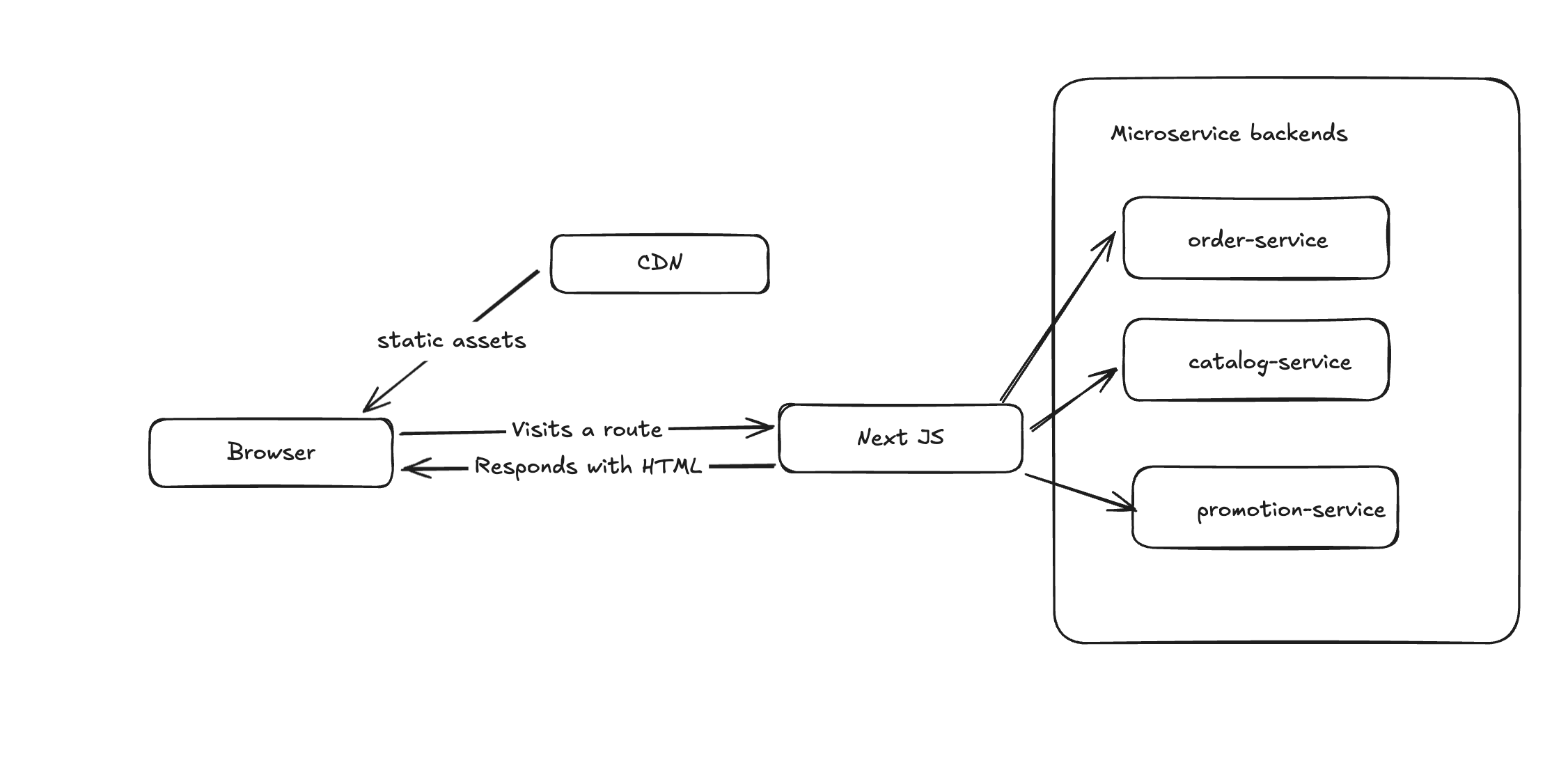

Our setup

Our checkout application is a React and Next.js app with a mix of server-side and client-side components. The server talks to a number of microservices before producing an HTML response. Most of those requests can run in parallel, with very few dependent queries that need to run sequentially.

The need for caching arose during the code freeze of last year’s holiday season, which is typically the time of the year when our site gets the most traffic. As most companies do, we have a code freeze during this period: for a few days, we don’t roll out any changes unless they are critical bug fixes.

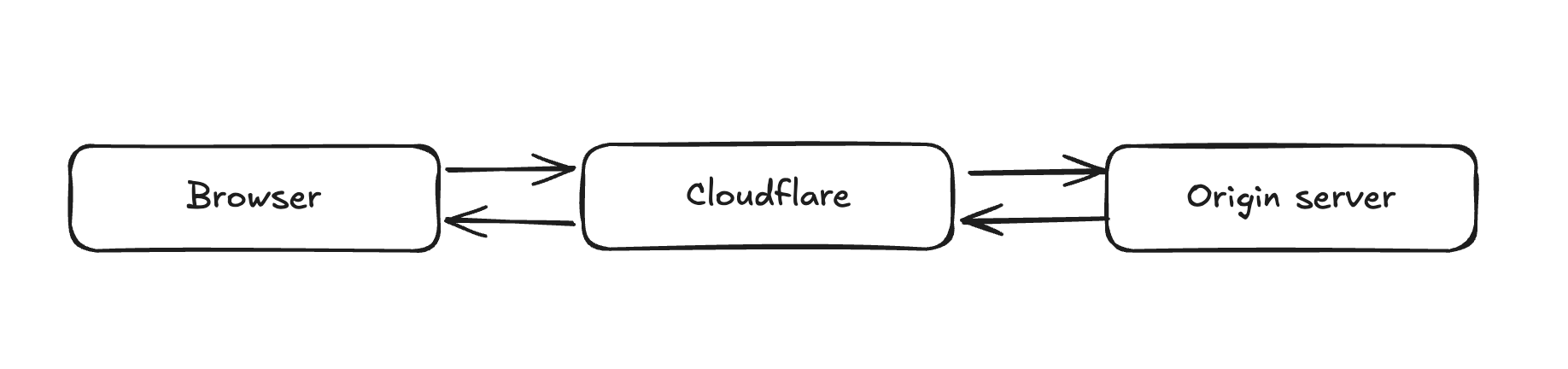

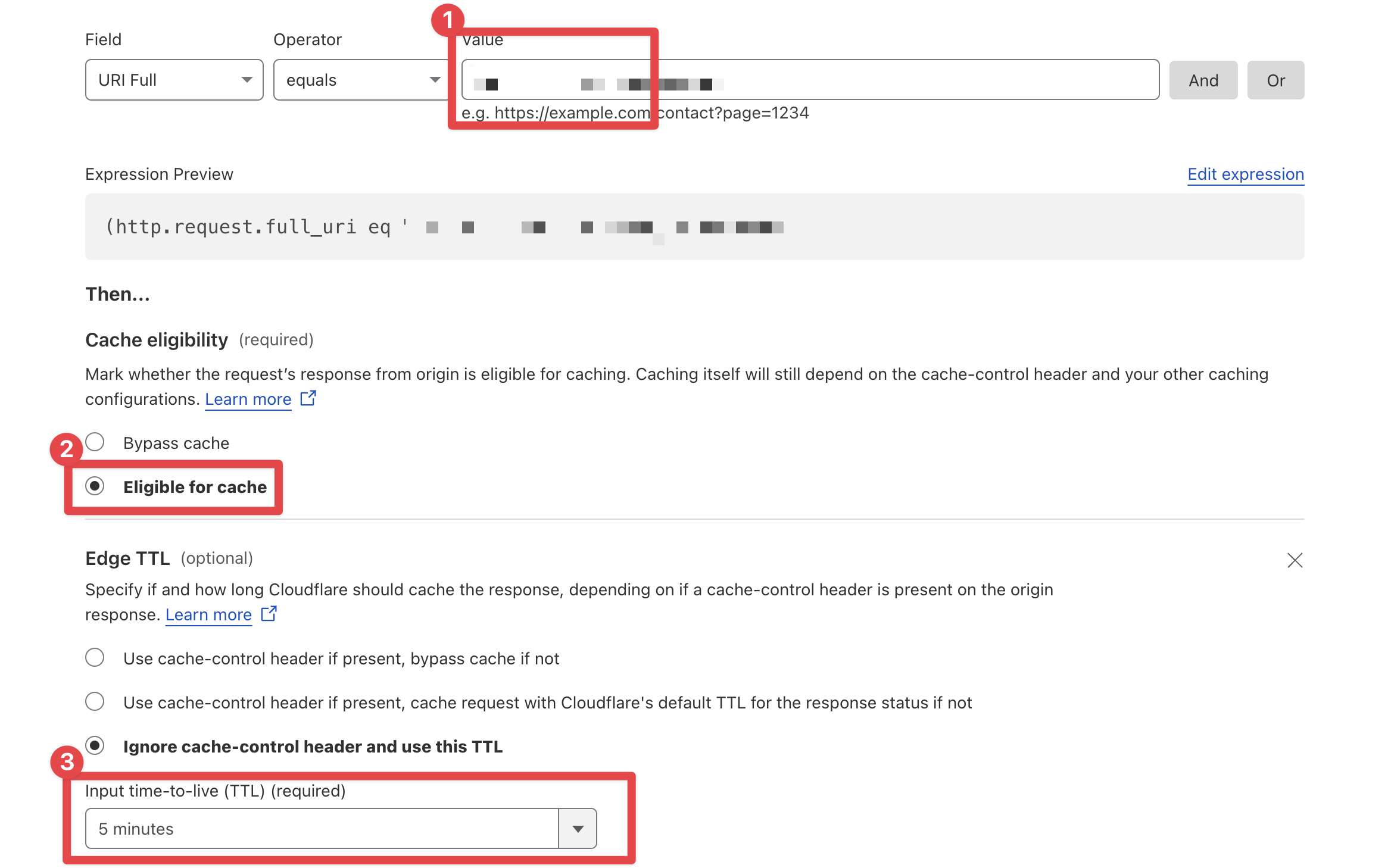

This provided an opportunity to leverage caching. All of our URLs are fronted by Cloudflare, so we can easily add caching rules based on the URL in the Cloudflare dashboard. The same can be done in Akamai or any similar provider.

Since all requests are serviced by a proxy layer before they hit the origin server, it’s easy to intercept them and set caching rules at the request level. A request only goes to the origin server if this layer allows it to pass through. If you don’t have such a layer, you can leverage browser caching to achieve a similar effect. More on that later.

Since we knew there were no changes being deployed, it was easy to implement caching. Our caching rule was: if the route matches certain criteria (e.g., the index route for the checkout application), then the page is cached for 5 minutes. You can do this in Cloudflare by adding a caching rule for the matching routes.
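We configured the rule in the dashboard rather than in code, but the logic behind it can be sketched in a few lines. The snippet below is only an illustration: the `/checkout` path, the `CACHEABLE_PATHS` list, and the `edgeTtlSeconds` function are hypothetical names, not part of Cloudflare's API.

```typescript
const FIVE_MINUTES_IN_SECONDS = 5 * 60;

// Hypothetical route criteria: only the index route of the checkout app.
const CACHEABLE_PATHS: RegExp[] = [/^\/checkout\/?$/];

// Returns the edge TTL in seconds for a path, or null when the request
// should bypass the cache and go straight to the origin server.
function edgeTtlSeconds(pathname: string): number | null {
  const matches = CACHEABLE_PATHS.some((pattern) => pattern.test(pathname));
  return matches ? FIVE_MINUTES_IN_SECONDS : null;
}

console.log(edgeTtlSeconds("/checkout"));         // prints 300
console.log(edgeTtlSeconds("/checkout/payment")); // prints null
```

Keeping the list of cacheable routes explicit makes it harder to cache a page by accident.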

You don’t usually cache HTML

Conventional wisdom dictates you never cache your HTML. You cache all your static assets (JS, CSS, images). Typically you have a build process that fingerprints your static assets using content-based hashing. These are then edge-cached on a CDN, ideally in an immutable fashion with a long TTL. The HTML, on the other hand, is served from the origin server every time. As long as a file’s content never changes, you keep getting served the file from the CDN. On a subsequent build, if the file changes, a new fingerprint is generated for it, and the HTML response is modified to reference the new asset URL with the new fingerprint. This is why you don’t cache the HTML. As long as the HTML is “fresh”, you are guaranteed you will never serve stale content to your users.

With JAMStack, there is no server. All pages get built out as HTML during build time, so every route can be treated as a static HTML asset that is edge-cached. JAMStack relies on deployments as the source of truth: as long as you don’t have a new deployment, it will keep serving cached content from the edge. This works fine for static sites that don’t rely on a server to respond to requests, or for sites without any dynamic content such as an e-commerce cart, A/B tests, or feature flags.

In our case, we are a full-blown e-commerce app and run several A/B tests, so there’s no guarantee that the HTML document will be deterministic for a given deployment. This is why we recommend keeping the TTL short. The exact timing really depends on your use case, but we have found that somewhere between 1 minute and 20 minutes is reasonable. We went with 5 minutes and it works really well. The HTML gets regenerated every 5 minutes and edge-cached. All users who arrive within the next 5 minutes receive the cached copy.
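The behaviour of a short TTL can be illustrated with a tiny in-memory cache. This is a simplified sketch of the idea, not how a CDN is implemented: the `ShortTtlCache` class and its injectable clock are our own illustrative names, and a real edge cache works per location and handles much more.

```typescript
type Clock = () => number; // returns the current time in milliseconds

class ShortTtlCache {
  private entries = new Map<string, { html: string; expiresAt: number }>();
  private ttlMs: number;
  private now: Clock;

  constructor(ttlMs: number, now: Clock = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Returns the cached HTML while it is still fresh; otherwise calls
  // render() (standing in for the origin server) and caches the result.
  get(url: string, render: () => string): string {
    const entry = this.entries.get(url);
    if (entry !== undefined && this.now() < entry.expiresAt) {
      return entry.html;
    }
    const html = render();
    this.entries.set(url, { html, expiresAt: this.now() + this.ttlMs });
    return html;
  }
}

// Usage with a fake clock so the example is deterministic.
let fakeNow = 0;
let renders = 0;
const cache = new ShortTtlCache(5 * 60 * 1000, () => fakeNow);
const render = () => `<html>render #${++renders}</html>`;

const first = cache.get("/checkout", render);  // rendered by the "origin"
fakeNow = 4 * 60 * 1000;
const second = cache.get("/checkout", render); // still fresh: cached copy
fakeNow = 5 * 60 * 1000;
const third = cache.get("/checkout", render);  // expired: rendered again
console.log(first === second, third === first, renders); // prints "true false 2"
```

Three requests over five minutes cost only two renders here; with real traffic the ratio is far more favourable.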

Getting buy-in

While the technical aspect of this is simple, it involved getting buy-in from several folks who were affected by this caching, such as the marketing team, including content authors and editors who use a CMS to publish or edit content on the site. It is imperative that they know to expect a slight delay between publishing and the change showing up on the live site. It also requires buy-in from your product managers and QA team, who need to understand how caching works so they don’t panic or raise false bugs when they see issues that auto-rectify in a few minutes. These factors help in determining the right duration for the TTL.

How to implement

One of the first things to consider when using this technique for dynamic sites is to ensure that the server-rendered HTML does not contain anything specific to a user. In other words, you want to cache the guest experience of a page, not the personalized version of it.

You don’t want to cache the logged in version of a page that reflects a user’s name or cart and then display that to every other user for the next few minutes 😱. This can be achieved in 2 ways.

- Only cache routes that are public or have no dynamic content / personalization - landing pages and blog posts are ripe for this. Account pages or order status pages not so much.

- With a little foresight, you can architect your applications in a way that anything that requires a user’s authentication information is client-side. So the server-rendered HTML is agnostic to any user and can be safely cached. The client-side JavaScript handles dynamic content such as personalized coupon codes or fetching the user’s cart.
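The second approach can be sketched as follows. This is a minimal illustration under assumptions: `/api/cart` is a hypothetical endpoint that identifies the user from their session cookie, and `Cart`, `Fetcher`, and `loadCart` are names we made up for the example.

```typescript
// Minimal shape of the fetch function this sketch needs, so that it can be
// swapped for a fake in tests.
type Fetcher = (
  url: string,
  init: { credentials: "include" }
) => Promise<{ ok: boolean; json(): Promise<unknown> }>;

interface Cart {
  itemCount: number;
}

// Runs in the browser after the cached, user-agnostic HTML has loaded.
// "/api/cart" is a hypothetical endpoint that reads the session cookie.
async function loadCart(fetcher: Fetcher): Promise<Cart> {
  const response = await fetcher("/api/cart", { credentials: "include" });
  if (!response.ok) {
    return { itemCount: 0 }; // fall back to the guest experience
  }
  return (await response.json()) as Cart;
}
```

In the browser you would pass the global `fetch` as the fetcher. The cached HTML stays identical for everyone, and only this small request is specific to the user.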

Cache invalidation

The TTL alone is not sufficient for invalidation. Sometimes you may want to bust the cache manually, or you may have recurring events, such as new content being published in a CMS or an update to the catalog, that require cache busting every time. In those cases, you can use your CDN provider’s API to bust the cache. For example, after each deployment, you can trigger a cache invalidation call. You can do the same after content publish events in your CMS. Here’s a list of events that can trigger webhooks in Contentful.
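As a rough sketch of what such a call could look like with Cloudflare, the helper below builds a purge-by-URL request for the zone's `purge_cache` endpoint. The `buildPurgeRequest` helper, the zone ID, the token, and the example URL are all placeholders of ours; check the request shape against the current Cloudflare API documentation before relying on it.

```typescript
interface PurgeRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Builds a purge-by-URL request for Cloudflare's cache purge endpoint.
// zoneId and apiToken are placeholders you would supply from your own account.
function buildPurgeRequest(zoneId: string, apiToken: string, urls: string[]): PurgeRequest {
  return {
    url: `https://api.cloudflare.com/client/v4/zones/${zoneId}/purge_cache`,
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiToken}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ files: urls }),
  };
}

// Example with placeholder values.
const purge = buildPurgeRequest("ZONE_ID", "API_TOKEN", ["https://example.com/checkout"]);
console.log(purge.url); // prints "https://api.cloudflare.com/client/v4/zones/ZONE_ID/purge_cache"
```

A deployment script or a CMS webhook handler could then send it with `fetch(purge.url, { method: purge.method, headers: purge.headers, body: purge.body })`.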

Leveraging Cache-Control headers

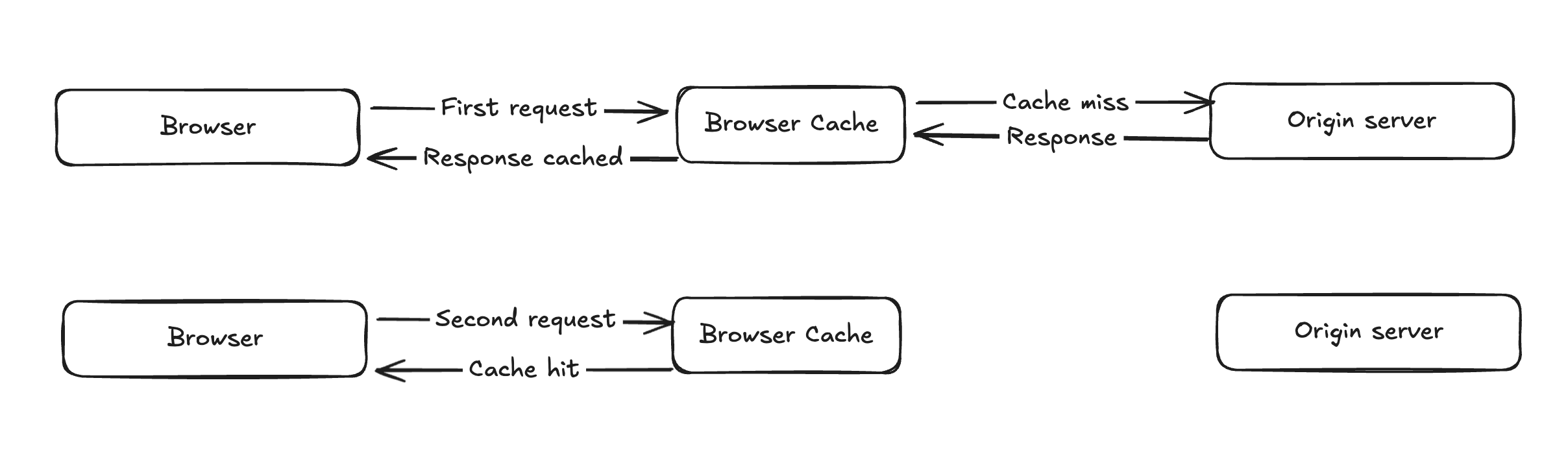

If you are running a site that doesn’t have a Cloudflare or Akamai layer in front of it, but you are running a server, you can still benefit from this technique, but you have to take it a step further. Use Cache-Control headers in your response to instruct the user’s browser to cache the document. Adding the following header will cache your HTML for a minute. Here’s a really good article on understanding cache control headers.

Cache-Control: max-age=60

The performance gain with this is even greater because, once cached, the page is loaded from the browser cache at sub-millisecond speeds. But keep in mind that every user needs to warm their own cache: the first load comes from the origin server, and subsequent loads are served from the user’s browser cache until the TTL expires. These loads won’t even hit the CDN edge location, so they are blazing fast.
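On the server, setting this header is typically a one-liner. Here is a minimal sketch, assuming a response object with a `setHeader` method (Node's `http.ServerResponse` and the Next.js `res` object both have one); the `allowBrowserCaching` helper and the `HeaderWritable` interface are our own illustrative names.

```typescript
// Minimal shape of a server response object for this sketch.
interface HeaderWritable {
  setHeader(name: string, value: string): unknown;
}

// Tells the browser it may reuse the HTML for maxAgeSeconds without
// requesting it again.
function allowBrowserCaching(res: HeaderWritable, maxAgeSeconds: number): void {
  if (!Number.isInteger(maxAgeSeconds) || maxAgeSeconds < 0) {
    throw new RangeError("maxAgeSeconds must be a non-negative integer");
  }
  res.setHeader("Cache-Control", `max-age=${maxAgeSeconds}`);
}

// Usage with a fake response object that just records the headers it is given.
const recorded: Record<string, string> = {};
const fakeRes: HeaderWritable = {
  setHeader: (name, value) => {
    recorded[name] = value;
  },
};
allowBrowserCaching(fakeRes, 60);
console.log(recorded["Cache-Control"]); // prints "max-age=60"
```

As with edge caching, only send this header for responses that contain nothing specific to a user.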

Advantages of this approach

We have found that, in addition to faster load times, there’s the benefit of less load on the origin servers, especially during peak times. Traffic spikes are easily handled without needing to scale our servers.

Conclusion

By leveraging short TTL HTML caching, we were able to drastically improve our checkout app’s load times from 1400ms to 71ms. This simple yet effective technique allows us to strike a balance between performance and freshness, ensuring that users get a lightning-fast experience without serving stale content.

While traditionally, HTML caching is avoided due to concerns about outdated content, we demonstrated that a well-calibrated short TTL and strategic cache invalidation can make it a viable solution even for dynamic e-commerce applications. Implementing this approach requires careful planning, buy-in from stakeholders, and thoughtful consideration of which pages can safely be cached. However, when done right, the benefits are immense—not just in performance but also in reducing the load on origin servers, making scaling more efficient.

If you're looking for a simple yet powerful way to dramatically speed up your web app, consider caching your HTML with a short TTL. The results might surprise you! 🚀