When I joined WHOOP as a Backend Software Engineer co-op, I was excited about AI but mostly in the abstract. I had seen demos and read about emerging models. What I had not experienced yet was what it takes to bring AI into a real product in a way that feels trustworthy, secure, supportive, and genuinely helpful to members. At WHOOP, I had the chance to work on exactly that. Over my co-op, I contributed to three major areas of the AI experience: expanding AI across the app, designing onboarding for new members, and developing proactive insights after workouts. Along the way, I learned that the hard part is not just getting AI to “work”; it is making it feel human, responsible, and aligned with the mission to unlock human performance.

7 posts tagged "ai"

We Shipped GPT-5.1 in a Week. Here's How We Validated It.

The latest model isn't always the best model for every use case.

Before we can chat about GPT-5.1, we have to talk about GPT-5. When GPT-5 dropped, we were excited. Our evals showed GPT-5 was clearly more intelligent, and we quickly rolled it out for our high-intelligence agents and high-reasoning tasks where that capability shines. But for our low-latency chat, GPT-5's minimal reasoning mode actually underperformed GPT-4.1 on our evals. Different use cases, different results. Our per-use-case evaluations allowed us to make these determinations immediately.

We shared those findings directly with OpenAI in our weekly call with them and at DevDay. At DevDay, we spent time with their engineers, walking through our eval results and discussing what we needed for latency-sensitive applications like chat. That collaboration mattered.

GPT-5.1 shipped with a new "none" reasoning mode that addressed exactly what we'd flagged. We ran our evals again, and this time the results were different. Within a week, we had validated it against over 4,000 test cases, A/B tested it in production, and rolled it out to everyone.

22% faster responses. 24% more positive feedback. 42% lower costs.

Here's exactly what we evaluated, what we found, and why we shipped.

Evals First: Validating GPT-5.1

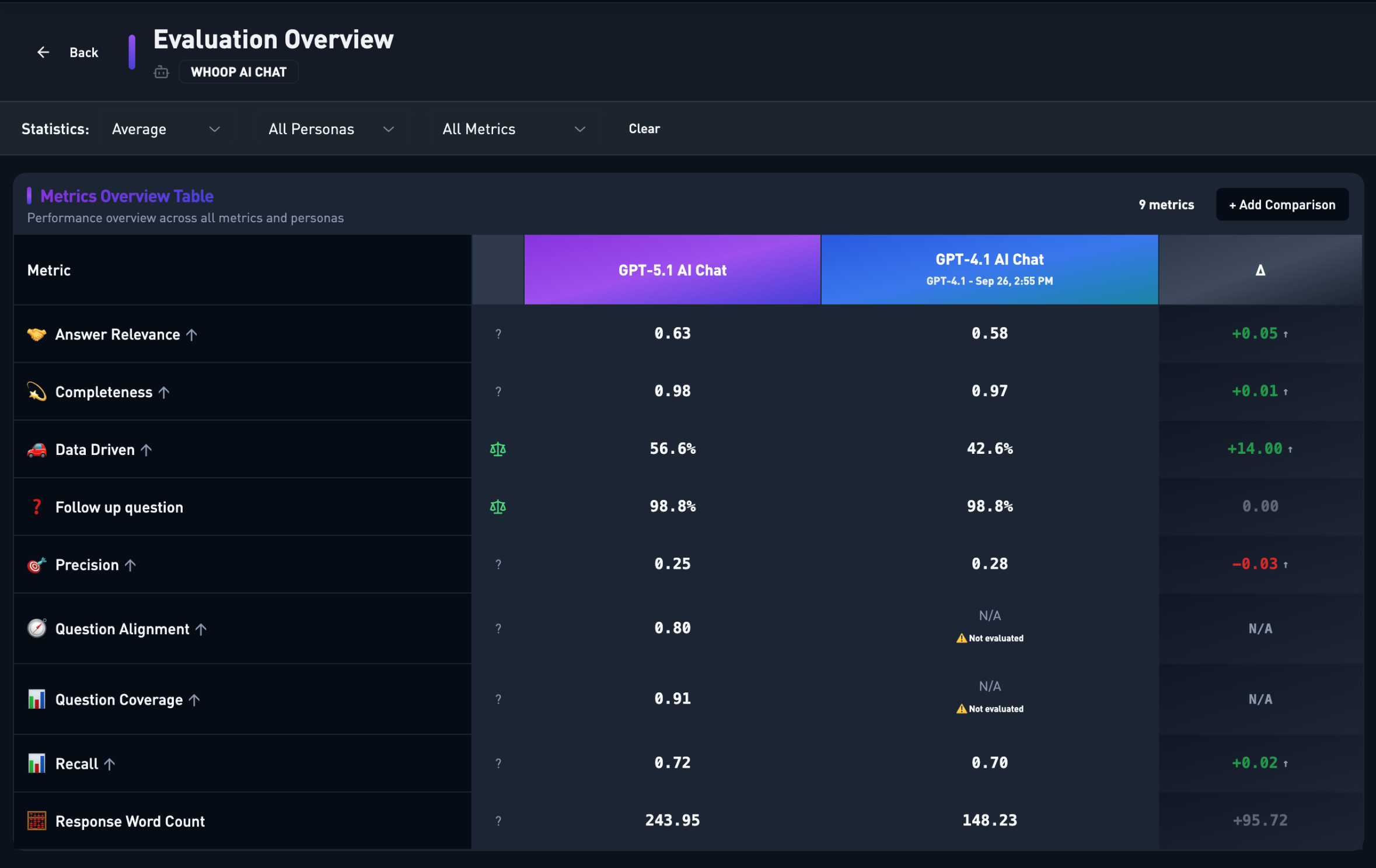

Before touching production, we ran our full evaluation suite against GPT-5.1, comparing it to our GPT-4.1 baseline for our core chat use case. Here's what we found:

Our internal evaluation dashboard comparing GPT-4.1 (baseline) against GPT-5.1 across over 4,000 test cases

| Metric | GPT-4.1 Baseline | GPT-5.1 | Change |
|---|---|---|---|
| Answer Relevance | 0.58 | 0.63 | +0.05 |
| Completeness | 0.97 | 0.98 | +0.01 |
| Data Driven | 42.6% | 56.6% | +14.0 pp |
| Recall | 0.70 | 0.72 | +0.02 |
| Follow-up Question | 98.8% | 98.8% | 0.0 pp |
| Precision | 0.28 | 0.25 | -0.03 |
| Response Word Count | 148.23 | 243.95 | +95.72 |

The headline numbers were impressive: Answer Relevance rose from 0.58 to 0.63, Data Driven responses rose 14 percentage points, and Recall improved from 0.70 to 0.72. GPT-5.1 was consistently surfacing more personalized health insights. The "Data Driven" metric measures how often responses incorporate actual individualized data rather than generic advice, so jumping from 42.6% to 56.6% is a game-changer for personalized coaching.

What's driving that Data Driven jump? Significantly higher (and more accurate) tool call usage, primarily focused on AIQL queries—our internal query language for pulling individualized data like sleep, strain, and recovery. More data queries mean more personalized context in every response.

We measured answer relevance, recall, data usage, tool accuracy, and conversation dynamics. There are trade-offs: GPT-5.1 shows a slightly lower precision score (0.25 vs 0.28) and produces somewhat longer responses, even after light prompt tuning to reduce verbosity. But those extra tokens are doing useful work. The model infers intent better and explains why, not just what. The added detail shows up primarily in complex coaching conversations that deserve deeper explanation, not in simple one-off questions. When we reviewed individual traces, the trade-off was clearly worth it.

The Real Test: Production

Evals told us GPT-5.1 was ready. But evals only tell part of the story—the real test is always production.

We rolled GPT-5.1 out to roughly 10% of production load as a controlled A/B test, monitoring latency, tool usage, cache hit rates, user feedback, and token spend. The live data told an even better story than our evals predicted:

22% Faster Responses

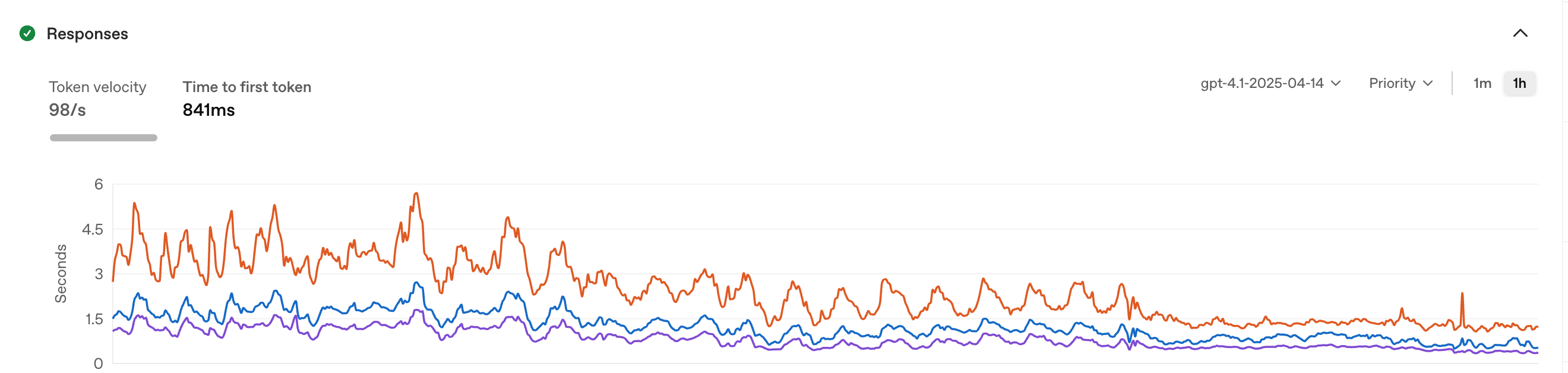

Here's what surprised us: despite providing more comprehensive answers and using tools more thoroughly, GPT-5.1's time to first token dropped significantly. This defies the usual trend where "smarter" models are slower.

| Percentile | GPT-4.1 | GPT-5.1 | Improvement |
|---|---|---|---|
| p50 (median) | 1.53s | 0.98s | 36% faster |
| p90 | 2.88s | 1.96s | 32% faster |
| p99 | 5.83s | 4.55s | 22% faster |

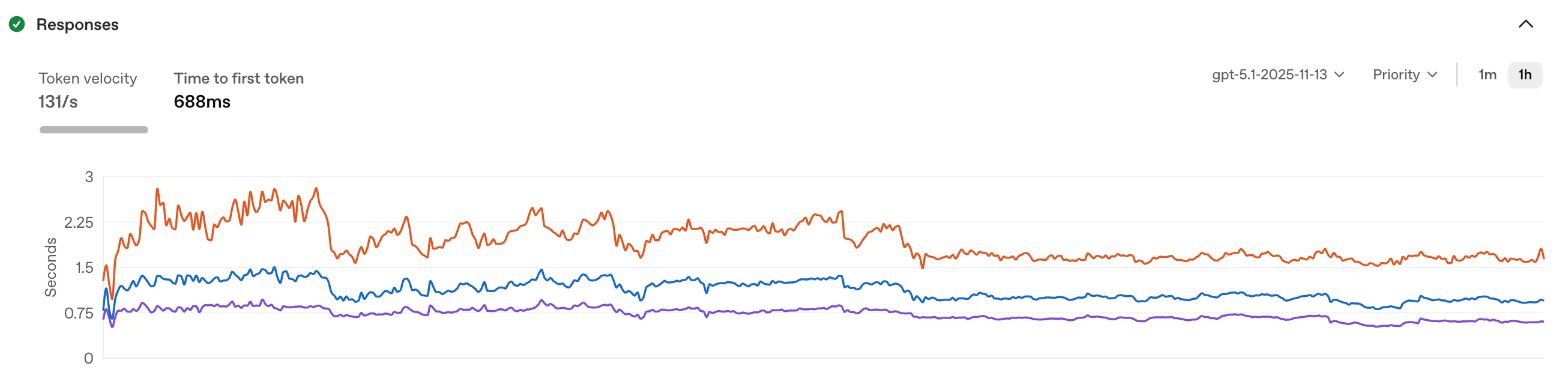

The median response now starts streaming in under a second. The model itself is faster: 34% higher token velocity (98/s → 131/s) and 18% faster time-to-first-token (841ms → 688ms). That's not our optimization. It's a better model on better infrastructure.

GPT-4.1 baseline: 98 tokens/sec, 841ms time to first token

GPT-5.1: 131 tokens/sec, 688ms time to first token
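The percentages in the latency table and the throughput figures follow directly from the raw numbers. Here is a minimal sketch of that arithmetic; the helper names are illustrative and not part of any WHOOP tooling.

```python
def percent_faster(baseline: float, candidate: float) -> int:
    """Percent reduction for a lower-is-better metric such as latency."""
    return round((baseline - candidate) / baseline * 100)


def percent_higher(baseline: float, candidate: float) -> int:
    """Percent increase for a higher-is-better metric such as tokens/sec."""
    return round((candidate - baseline) / baseline * 100)


# Values copied from the table and captions above: (GPT-4.1, GPT-5.1).
LATENCY_SECONDS = {"p50": (1.53, 0.98), "p90": (2.88, 1.96), "p99": (5.83, 4.55)}

for percentile, (gpt_41, gpt_51) in LATENCY_SECONDS.items():
    print(f"{percentile}: {percent_faster(gpt_41, gpt_51)}% faster")

print(f"token velocity: {percent_higher(98, 131)}% higher")
print(f"time to first token: {percent_faster(841, 688)}% faster")
```

Note that the headline "22% faster" figure matches the p99 row, the most conservative of the three percentiles.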

Amplified by Better Caching

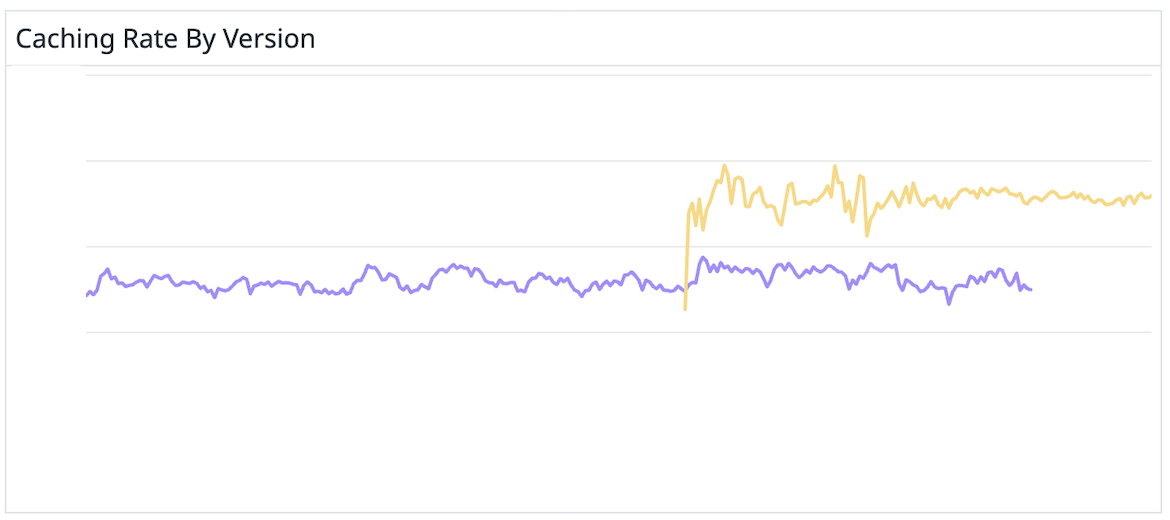

On top of the model improvements, we saw dramatically improved caching efficiency.

Cache hit rates improved by 50% with GPT-5.1

OpenAI's improved GPT-5.1 infrastructure meant a 50% improvement in cache hit rates. We didn't change anything on our end; the model just automatically has a higher cache hit rate for us.

Cached tokens are 10x cheaper, and our coaching experience has an extremely high input-to-output token ratio—lots of context for every answer. That combination makes caching efficiency a massive cost lever.

24% More Positive Feedback

Users prefer 5.1 over 4.1 with a 24% increase in positive feedback (thumbs up) and a corresponding decrease in negative feedback. Faster responses plus better answers equals deeper engagement.

42% Lower Token Costs

If GPT-5.1 produces wordier responses (+96 words on average), how did costs go down? The answer is that 50% caching improvement. Serving significantly more input tokens from cache at 10x lower cost, combined with lower overall prices than GPT-4.1, drove total cost down.
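To see why cache hit rate is such a strong cost lever when input tokens dominate, consider a rough model of blended input cost. This is a sketch under stated assumptions: the hit rates (40% and 60%) and the price are hypothetical placeholders chosen to represent a 50% relative improvement, not WHOOP's actual figures. Only the 10x cached-token discount comes from the post.

```python
def blended_input_cost(
    input_tokens: int,
    price_per_million: float,
    cache_hit_rate: float,
    cached_discount: float = 10.0,  # cached tokens are 10x cheaper (from the post)
) -> float:
    """Cost of input tokens when a fraction of them is served from cache."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    per_token = price_per_million / 1_000_000
    cached = input_tokens * cache_hit_rate
    uncached = input_tokens - cached
    return uncached * per_token + cached * per_token / cached_discount


# Hypothetical numbers: a 40% hit rate improving by half to 60%,
# at an arbitrary price of 1.00 per million input tokens.
before = blended_input_cost(1_000_000, 1.00, cache_hit_rate=0.40)
after = blended_input_cost(1_000_000, 1.00, cache_hit_rate=0.60)
savings_percent = (before - after) / before * 100
print(f"before={before:.2f} after={after:.2f} savings={savings_percent:.1f}%")
```

With these placeholder inputs, caching alone cuts input cost by roughly 28% at an unchanged price. Any reduction in the per-token price would stack on top of that.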

At scale, these efficiency gains translate to significant savings we can reinvest into building better AI experiences.

From 10% to 100% Rollout

Based on these results, we rolled GPT-5.1 out to 100% of production traffic. The metrics have held steady, confirming what our evals and A/B test predicted.
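A staged rollout like this one, from roughly 10% of load to 100%, is often implemented with deterministic bucketing. The sketch below is one common way to do it, not a description of WHOOP's actual traffic splitter. The function name, the per-member hashing, and the model labels are assumptions for illustration.

```python
import hashlib

ROLLOUT_PERCENT = 10  # share of traffic routed to the candidate model


def assign_model(member_id: str, rollout_percent: int = ROLLOUT_PERCENT) -> str:
    """Map a member to a model arm using a stable hash of their ID.

    The same member always lands in the same bucket, so raising
    rollout_percent only ever moves members from baseline to candidate.
    """
    digest = hashlib.sha256(member_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in [0, 100)
    return "gpt-5.1" if bucket < rollout_percent else "gpt-4.1"


# Going from the A/B test to full rollout is a one-line config change.
print(assign_model("member-123", rollout_percent=10))
print(assign_model("member-123", rollout_percent=100))
```

Hashing rather than random sampling keeps each member's experience consistent across sessions, which also keeps feedback and latency comparisons between the two arms clean.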

What We Learned

A year ago, evaluating a new model would have taken weeks. Now we can eval in hours and ship the same day.

When GPT-5 came out, we knew within hours it wasn't the right fit for low-latency chat. When GPT-5.1 came out, we knew within hours it was. That speed comes from investing in use-case-specific evaluation infrastructure. As model release cadence accelerates, this kind of rapid validation isn't optional. It's how you lead.

Our partnership with OpenAI made this launch better and helped make 5.1 the model we needed. Our in-house eval framework gave us the ability to validate any new model against our specific use cases, catch regressions before they hit production, and ship with confidence.
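Catching regressions before production boils down to comparing a candidate model's scores against the baseline, metric by metric. The following is a minimal sketch of such a gate, not WHOOP's eval framework. The `MetricResult` type, the `find_regressions` helper, and the tolerance value are hypothetical; the scores are taken from the evaluation table earlier in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricResult:
    name: str
    baseline: float
    candidate: float
    higher_is_better: bool = True


def find_regressions(results: list[MetricResult], tolerance: float) -> list[str]:
    """Return names of metrics where the candidate is worse by more than tolerance."""
    regressions = []
    for result in results:
        delta = result.candidate - result.baseline
        if not result.higher_is_better:
            delta = -delta  # normalize so that positive always means "better"
        if delta < -tolerance:
            regressions.append(result.name)
    return regressions


# Scores from the GPT-4.1 vs GPT-5.1 table above.
CHAT_EVAL = [
    MetricResult("Answer Relevance", 0.58, 0.63),
    MetricResult("Completeness", 0.97, 0.98),
    MetricResult("Recall", 0.70, 0.72),
    MetricResult("Precision", 0.28, 0.25),
]

flagged = find_regressions(CHAT_EVAL, tolerance=0.02)  # hypothetical threshold
print(flagged)
```

With this threshold, only Precision is flagged. That is the kind of signal that prompts a manual review of individual traces rather than an automatic rejection.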

The result: 56.6% data-driven responses (up from 42.6%), faster responses that make coaching feel like a conversation, and lower costs we can reinvest into building better AI experiences.

If you want more context on how we build and ship agents at WHOOP, check out our earlier post on AI Studio, "From Idea To Agent In Less Than Ten Minutes".

What's Next

We're not done. The ultimate metric isn't eval scores or even user feedback. It's whether better AI responses lead to better health outcomes. That's where we're headed.

The bottom line: We shipped a new model in a week, validated by our custom eval framework. 22% faster responses, 24% more positive feedback, 42% lower costs. That's what rapid iteration looks like.

Want to build the future of health and performance with AI? WHOOP is hiring engineers, product managers, and AI researchers who are passionate about using technology to unlock human performance.

From Idea To Agent In Less Than Ten Minutes

At WHOOP, we've been at the forefront of applied AI not by competing in the model race, but by building on top of it with our unmatched physiological data and domain expertise. While others were still debating the potential of LLMs, we had already spent years understanding how to integrate these models with real-world systems and data pipelines.

This early investment paid dividends. By the time LLMs started to become truly powerful, we weren't starting from scratch. We had years of hard-won expertise in prompt engineering, evals, model selection, and most critically, applying AI to physiological insights with robust privacy and security guardrails.

AI Studio

In the years we spent building LLM agents, we built all kinds of one-off scripts and tools. They had to stay flexible while we invented new ways of doing things, and they had to let us keep up with the gen-ai-model-of-the-month release cadence we were seeing. But integrating new data sources and rearranging logic to provide the right context to the LLMs meant that even minor changes to the way our core agents work could take weeks.

When coding agents like Cursor and Claude Code came out and the coding model race really heated up, we realized we could finally build the LLM Agent IDE we'd always dreamed of. We already had a strong understanding of what the core abstractable pieces of LLM agents were (system instructions, model, and tools); we just needed to build a simple backend to store them and a simple frontend to manage them. In a week or so, we had built the first iteration of AI Studio.
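To make the "core abstractable pieces" concrete, here is a minimal sketch of what a stored agent definition could look like. The `AgentDefinition` class, its field names, and the example tool names are hypothetical. Only the three pieces themselves (system instructions, model, and tools) come from the description above.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentDefinition:
    """The three core pieces of an agent, plus a name to store it under."""

    name: str
    system_instructions: str
    model: str
    tools: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize for a simple storage backend."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "AgentDefinition":
        """Rebuild the definition from its stored form."""
        return cls(**json.loads(raw))


# Hypothetical example agent; tool names are placeholders.
draft = AgentDefinition(
    name="sleep-coach-prototype",
    system_instructions="You are a supportive sleep coach.",
    model="gpt-5.1",
    tools=["get_sleep_summary", "get_recovery_score"],
)
restored = AgentDefinition.from_json(draft.to_json())
print(restored == draft)
```

Because the whole agent is plain data, swapping the model or editing the prompt is a change to one field rather than a code change.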

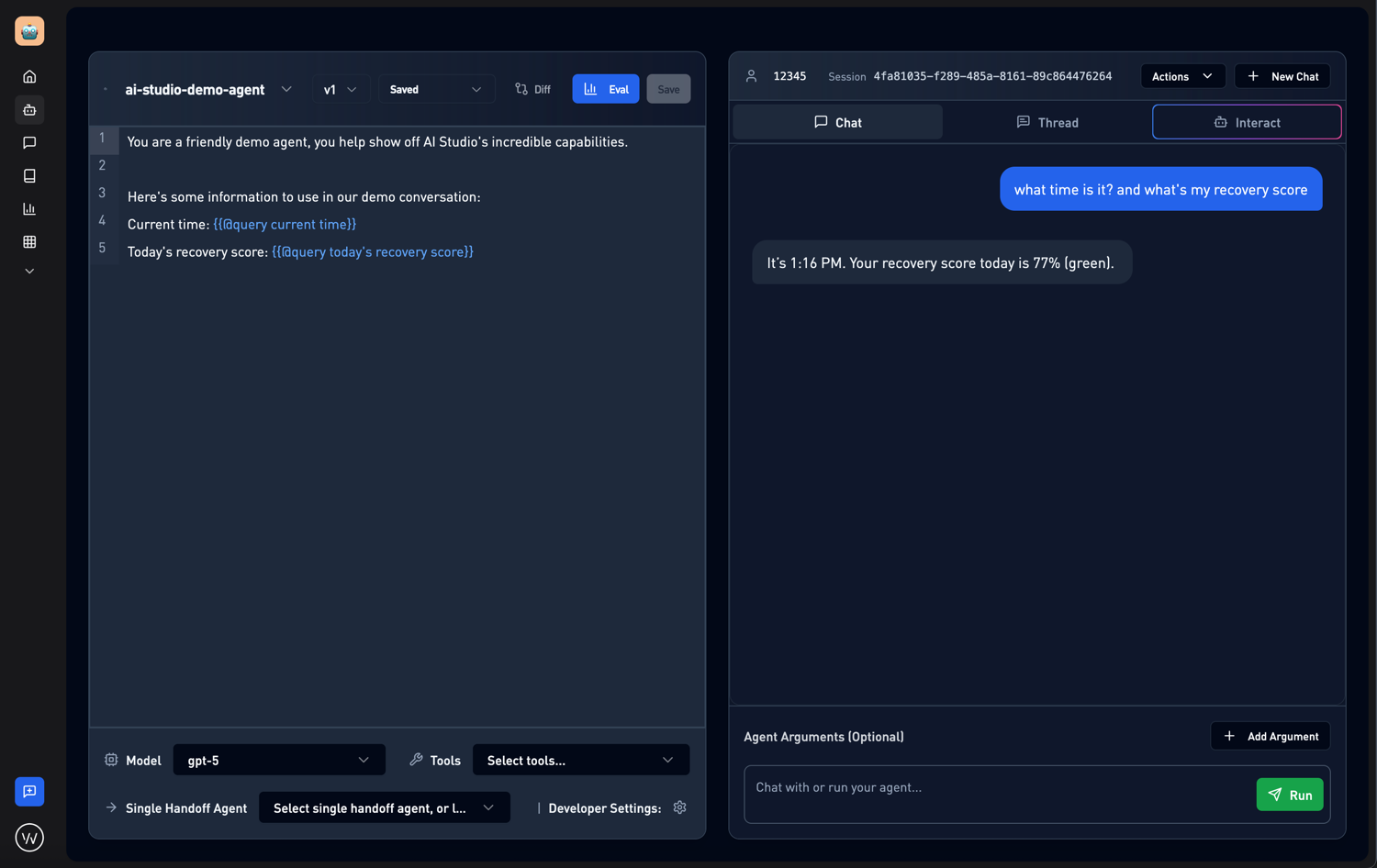

The AI Studio agent editor showing system prompts, model selection, and tool configuration

It has a lot more features today than it did back then, but the original version nailed down the core need: we could now go from "what if we build an agent that does X" to actually testing that agent in less than 10 minutes. Oftentimes someone will mention a really great idea at the start of standup, and by the end of it they will have built a working prototype and sent it to all of our phones to try out. This level of rapid iteration has unlocked a creativity and pace we have never seen before. More importantly, it capitalizes on the nature of generative AI: 95% of the value can come in the first 5% of effort, and that last 5% of polish will take 95% of the effort. So we prioritize trying a lot of ideas and failing fast, never wasting time polishing something that won't work.

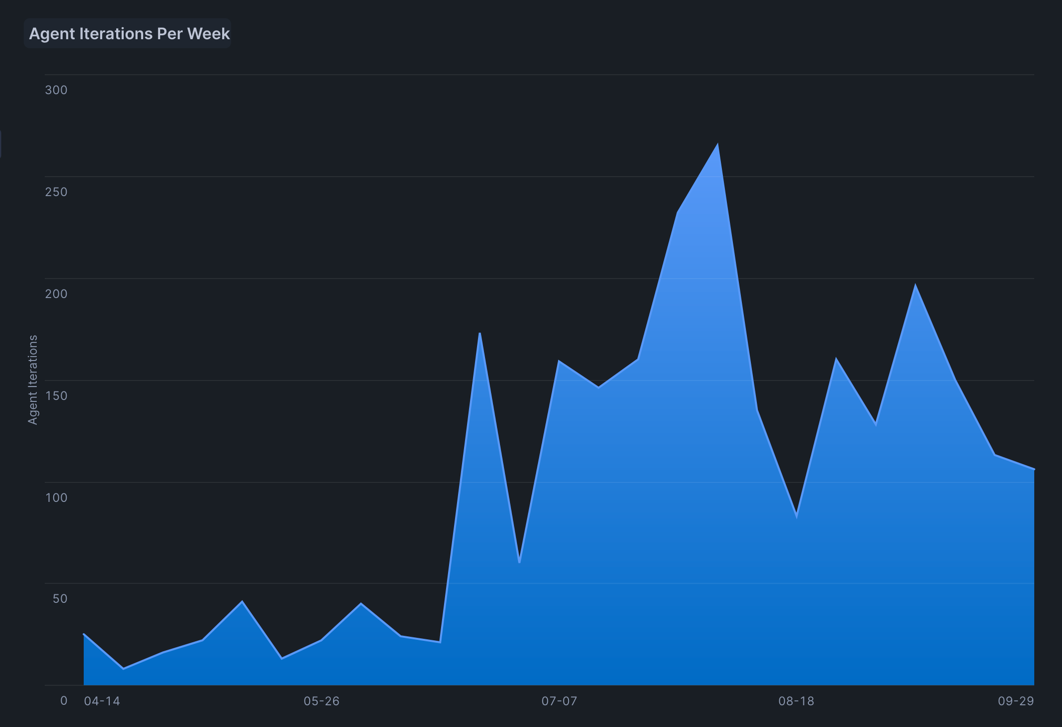

Growth of agent iterations over 6 months

After 6 months, we've created and tested over 2,500 iterations of different agents, and safely deployed 235 of them to production across 41 live agents like WHOOP Coach or Day In Review.

The key word here is "safely." While AI Studio makes internal iteration quick and frictionless, going from idea to prototype in minutes, we haven't compromised on security or privacy when it comes to production. Every deployment goes through a built-in diff, approval, and deployment flow that ensures proper review of changes, adherence to our strict privacy policies, and validation of security guardrails. Most critically, the platform ensures that PII is never sent to model providers. This dual approach lets our teams move fast where it matters (experimentation and prototyping) while maintaining enterprise-grade security where it counts.
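As a rough illustration of a diff-then-approve gate, the sketch below blocks deployment until a change has both a non-empty diff and a named reviewer. It is an assumption-laden toy, not AI Studio's actual flow: the `Deployment` class and the `propose_change` and `approve` helpers are hypothetical, and it does not model the privacy or PII checks described above.

```python
import difflib
from dataclasses import dataclass
from typing import Optional


@dataclass
class Deployment:
    diff: str
    approved_by: Optional[str] = None

    @property
    def can_deploy(self) -> bool:
        """Deployable only if something changed and a reviewer signed off."""
        return bool(self.diff) and self.approved_by is not None


def propose_change(current_prompt: str, new_prompt: str) -> Deployment:
    """Build a reviewable unified diff between production and the candidate."""
    diff_lines = difflib.unified_diff(
        current_prompt.splitlines(),
        new_prompt.splitlines(),
        fromfile="production",
        tofile="candidate",
        lineterm="",
    )
    return Deployment(diff="\n".join(diff_lines))


def approve(deployment: Deployment, reviewer: str) -> Deployment:
    """Record who reviewed the diff."""
    deployment.approved_by = reviewer
    return deployment


change = propose_change(
    "You are a coach.\nBe concise.",
    "You are a coach.\nBe concise and cite the member's data.",
)
print(change.diff)
print(change.can_deploy)  # still unapproved at this point
approve(change, reviewer="reviewer@example.com")
print(change.can_deploy)
```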

What Makes AI Studio Different

Traditional AI development requires deep technical expertise at every layer of the stack. With AI Studio, we've abstracted the complexity while preserving flexibility:

- Visual Agent Builder: Define your agent's system prompt, select a model, and configure tools, all through a clean web interface

- Integrated Testing Environment: Chat with your agent in real-time, debug interactions, and iterate on prompts without deploying anything

- One-Click Tool Access: Connect to WHOOP's data ecosystem through pre-built tools for fetching or writing to things like weekly plan, healthspan, and activities with no API wrangling required

- Built-in Evaluation Framework: Test agent performance systematically with our integrated eval system

The result has fundamentally changed how we think about AI at WHOOP. It's no longer a specialized capability reserved for our AI team—anyone from product managers to data scientists to health coaches can prototype agents in minutes and deploy production-ready versions within a day. In fact, with the entire development cycle now being no-code, our product team are quickly becoming our strongest prompt engineers (shoutout Anjali Ahuja, Mahek Modi, Alexi Coffey, Camerin Rawson!).

The Game Changer: Inline Tools

As we pushed deeper into making agent iteration ultra-fast, we invented something we call inline tools, a breakthrough that's transformed how we build agents.

Traditional agent architectures separate the prompt from tool invocations. The LLM has to explicitly decide when to call a tool, format the request correctly, wait for the response, and then continue. This creates latency, complexity, and countless edge cases.

Inline tools flip this approach. We've created a markup language that allows us to trigger agent tools directly inside the system prompt itself. Here's what this looks like in practice:

Current time: {{@tool1}}

Today's recovery score: {{@tool2}}

would become

Current time: <result of tool1>

Today's recovery score: <result of tool2>

When the agent loads, these inline tool calls execute in parallel, injecting real-time, personalized data directly into the context. The LLM doesn't need to "decide" to fetch this data. It's already there, reducing latency and making the interaction feel instantaneous.

This seemingly simple innovation has profound implications:

- Faster Response Times: No round-trip tool calls means near-instant responses

- Simpler Mental Model: Developers think about data as part of the prompt, not as external API calls

- Better Consistency: Data is always present in the expected format

- Easier Testing: Debug prompts with real data injected, not placeholder variables
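The inline tool expansion described above can be sketched in a few lines. This is a minimal illustration of the idea, not WHOOP's markup language or implementation. The regular expression, the `render_prompt` function, and the stub tools with their return values are all assumptions; only the `{{@tool}}` placeholder syntax and the parallel execution come from the description.

```python
import asyncio
import re
from typing import Awaitable, Callable

# Matches placeholders of the form {{@tool_name}}.
INLINE_TOOL = re.compile(r"\{\{@(\w+)\}\}")

Tool = Callable[[], Awaitable[str]]


async def render_prompt(template: str, tools: dict[str, Tool]) -> str:
    """Run every referenced tool concurrently, then substitute the results.

    Raises KeyError if the template references an unregistered tool.
    """
    names = sorted(set(INLINE_TOOL.findall(template)))
    results = await asyncio.gather(*(tools[name]() for name in names))
    values = dict(zip(names, results))
    return INLINE_TOOL.sub(lambda match: values[match.group(1)], template)


# Stub tools with made-up values, standing in for real data fetches.
async def tool1() -> str:
    return "2025-01-01T08:00:00Z"


async def tool2() -> str:
    return "72%"


TEMPLATE = "Current time: {{@tool1}}\nToday's recovery score: {{@tool2}}"


def demo() -> str:
    return asyncio.run(render_prompt(TEMPLATE, {"tool1": tool1, "tool2": tool2}))


if __name__ == "__main__":
    print(demo())
```

Because `asyncio.gather` awaits all tools at once, total load time is bounded by the slowest tool rather than the sum of all of them. The model then sees a fully populated prompt on its first turn.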

The Compound Effect of Internal Tools

There's a broader lesson here about the compound value of internal developer tools. Every hour we invest in making AI Studio better pays dividends across every team, every agent, and every customer interaction. When you make something 10x easier, you don't just get 10x more of it. You unlock entirely new categories of innovation from people who couldn't participate before.

As LLMs continue to evolve and new models emerge, AI Studio ensures we can adopt them instantly. When a new model drops, every agent in our system can be upgraded with a dropdown selection. When we identify a new pattern that works well, it becomes a reusable component available to everyone.

This kind of leverage is now accessible to any team. Tools like Cursor and Claude Code have made it incredibly easy and low friction to spin up internal tools tailor-made to your company's stack and needs. The value compounds quickly: build once, benefit everywhere. At WHOOP, AI Studio has become the foundation for how we ship AI features—and it all started with asking what would make our own lives easier.

The Future is Already Here

While the tech world debates the future of AI agents, we're already living in it at WHOOP. Our members interact with AI agents dozens of times per day. They just experience them as helpful features, not "AI." Coach Chat provides personalized guidance. Daily Outlook surfaces insights from their data. Recovery recommendations adapt to their unique physiology.

Behind each of these experiences is an agent built in AI Studio, many of them created by people who had never worked with LLMs before. That's the real revolution: not the models themselves, but the platforms that make them accessible to everyone.

What's Next

What we've shared today is just the tip of the iceberg. The most powerful capabilities we've built into AI Studio are still under wraps—innovations that have fundamentally changed how we think about AI agents and what they're capable of.

Stay tuned. We'll be pulling back the curtain on these breakthroughs soon. And if you can't wait to see what we're building behind the scenes, join us!


Internships and Co-ops at WHOOP

Internships and Co-ops at WHOOP are more than working on temporary projects - they give students the chance to ship real work, explore new technologies, and see their impact firsthand. In the reflections that follow, two interns share their journeys: one as a college SWE intern building core product features, and another as a high school SWE intern who transformed an idea into a redesigned engineering blog. Together, their stories highlight how WHOOP empowers interns and co-ops of all backgrounds to learn, build, and belong.

What the heck is MCP?

MCP! It’s everywhere and it's confusing. In my opinion, the usual explanation of “it’s like a USB-C adapter” assumes a certain level of proficiency in this space. If, like me, you have been struggling to keep up with the AI firehose of information and hype, this article is for you. It is a gentle introduction to MCP and all things AI so you can understand some key terminology and architectures.

Hack Week 2025 - Build with AI

Last June, we hosted our biggest hackathon at WHOOP yet, and our first one focused on using AI. The event was open to the entire company: product managers, designers, operations, scientists, engineers, marketing, and more. The results were beyond what I could have imagined.

Agentic Coding Without Taking Down Prod

Agentic coding is on the rise, and the productivity gains from it are real. There are tons of success stories of non-programmers "vibe coding" SaaS apps to $50k MRR. But there are also cautionary tales of those same SaaS apps leaking API keys, racking up unnecessary server costs, and growing into giant spaghetti code that even Cursor can't fix.